You can now find the presentation slides from our seminar series on this page. Thanks to everyone who attended!

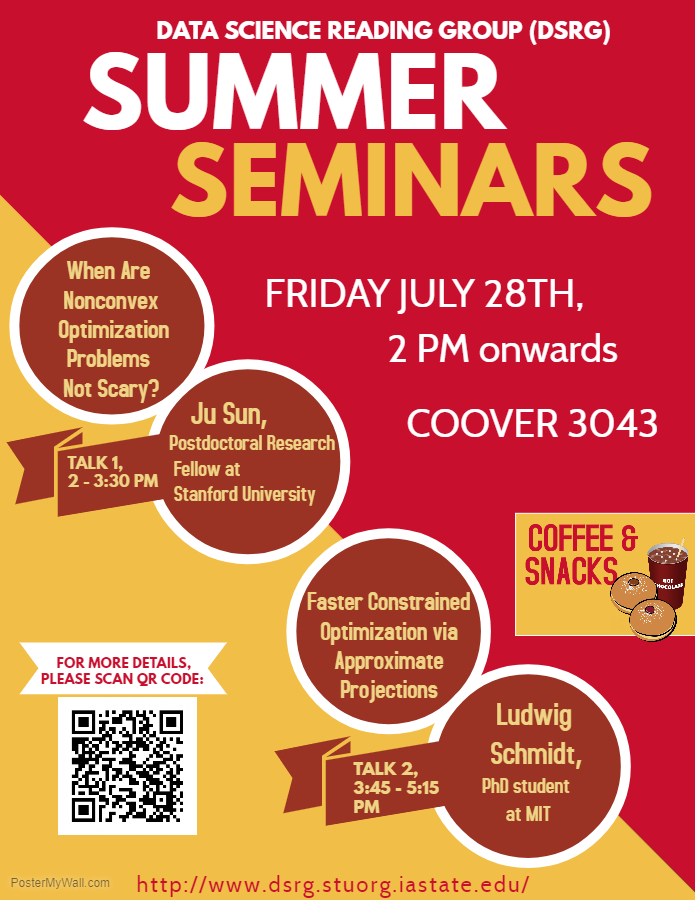

Summer Seminar – 7/28/2017


While the ILAS 2017 conference was taking place at Iowa State University, we had the privilege of inviting two young researchers to give talks.

Details of the first talk are as follows:

Date: 28 Jul 2017

Time: 2:00 PM – 3:30 PM

Location: 3043 ECpE Building Addition

Speaker: Ju Sun, Postdoctoral Research Fellow at Stanford University

Title: “When Are Nonconvex Optimization Problems Not Scary?”

For more details, check the department website.

Details of the second talk are as follows:

Date: 28 Jul 2017

Time: 3:45 PM – 5:00 PM

Location: 3043 ECpE Building Addition

Speaker: Ludwig Schmidt, PhD student at MIT

Title: “Faster Constrained Optimization via Approximate Projections” (tentative)

Refreshments (food and coffee) will be provided! Join us!

Weekly Seminar – 4/14/2017 – Low Rank and Sparse Signal Processing #4

Weekly Seminar – 3/31/2017 – Low Rank and Sparse Signal Processing #3

Weekly Seminar – 3/24/2017 – Low Rank and Sparse Signal Processing #2

References:

1. David I. Shuman et al. “The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains”. In: IEEE Signal Processing Magazine 30.3 (2013), pp. 83–98.

2. A. Sandryhaila and J. M. F. Moura. “Discrete Signal Processing on Graphs: Frequency Analysis”. In: IEEE Transactions on Signal Processing 62.12 (2014), pp. 3042–3054.

3. David I. Shuman, Benjamin Ricaud, and Pierre Vandergheynst. “Vertex-frequency analysis on graphs”. In: Applied and Computational Harmonic Analysis 40.2 (2016), pp. 260–291.

Slides: GSP